Engineering the next generation of AI

Research, engineering deep-dives, and practical guides for AI builders.

Solving long context for data agents

How to build data-intensive agents that avoid context window overflow using memory pointers.

How Claude became our Project Manager

How we went from ~4–6 hours of weekly project management overhead to about 1 hour by turning Claude into our team's Project Manager.

Lessons from shipping AI agents into data-centric SaaS products

What I've learned about shipping AI agents into data-centric SaaS products and getting product teams to embrace evaluations.

Vibescoping 101: LLM-assisted product scoping

A practical guide with prompts, templates, and a full walkthrough of our AI-assisted scoping workflow.

Is your test data holding back your AI agents?

Discover how flawed test data quietly sabotages AI agent development. Learn strategies for building robust, real-world test sets.

Advancing Long-Context LLM Performance in 2025

Exploring two training-free innovations, Infinite Retrieval and Cascading KV Cache, that rethink how LLMs process very long inputs.

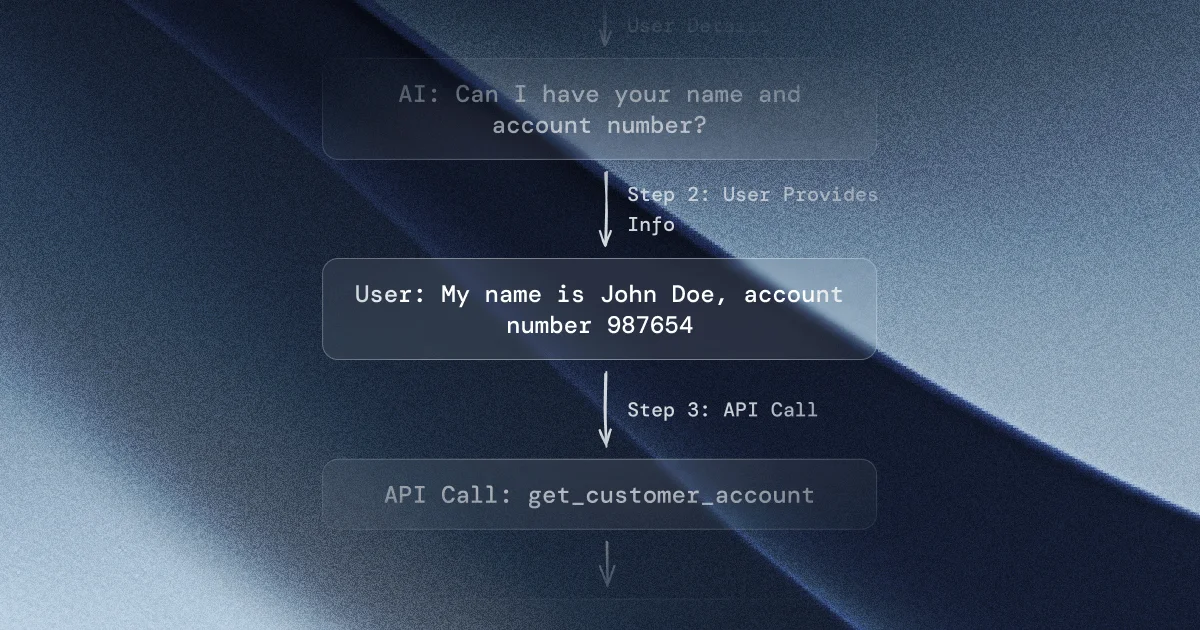

Automating Test Set Creation for Conversational AI Agents

We explore how structured evaluation pipelines can improve AI agent reliability, highlighting recent research from Zendesk.

Blueprint for tool-augmented LLMs: Learning from Salesforce's APIGen approach

We explore how a standardized JSON format, combined with function-call generation and verification, helps AI engineers build more reliable, verifiable, and varied function-calling datasets.

Phi-4 as an LLM Evaluator: Benchmarking and Fine-Tuning Experiments

We explored how Phi-4, Microsoft's latest 14B-parameter small language model, performs as an evaluator for LLM systems.

Flow Judge: An Open Small Language Model for LLM System Evaluations

Read our in-depth technical report on Flow Judge, an open-source, small (3.8B-parameter) language model optimized for efficient and customizable LLM system evaluations.

Self-Taught Evaluators Paper by Meta AI — Explained by AI Engineer

This research paper introduces a method for improving language model evaluators using only synthetic training data, which makes the approach highly scalable and efficient.

MAGPIE Explained: Alignment Data Synthesis from Scratch by Prompting Aligned LLMs with Nothing

MAGPIE tackles two major pain points in current open data creation methods: making synthetic data generation scalable and preserving data diversity at scale.

Exploring the potential of open(-source) LLMs

We explore why companies initially opt for closed-source LLMs like GPT-4 and discuss the growing shift towards open LLMs.

From Data Analyst to AI Engineer (in 2024)

Discover Tiina's motivations, the skills she developed, and the challenges she faced while transitioning from data analytics consultant to an AI engineering role.

Improving LLM systems with A/B testing

By tying LLM performance to business metrics and leveraging real-world user feedback, A/B testing can help AI teams create more effective LLM systems.

Introduction to Model Merging

How can we make LLMs more versatile without extensive training? Model merging allows us to combine the strengths of multiple AI models into a single, more powerful model.

Prometheus 2 Explained: Open-Source Language Model Specialized in Evaluating Other Language Models

The Prometheus 2 paper introduces a new state-of-the-art open LLM for evaluation whose judgments correlate highly with those of human evaluators and leading closed-source models.

LLM evaluation (2/2) — Harnessing LLMs for evaluating text generation

Evaluating LLM systems is a complex but essential task. By combining LLM-as-a-judge and human evaluation, AI teams can make it more reliable and scalable.

LLM evaluation (1/2) — Overcoming evaluation challenges in text generation

Evaluating LLM systems, particularly for text generation tasks, presents significant challenges due to the open-ended nature of outputs and the limitations of current evaluation methods.

Dataset Engineering for LLM Fine-Tuning

Our lessons from fine-tuning LLMs and how we use dataset engineering.

Introduction to Prompt Engineering

What we learned about prompt engineering by building a SaaS product on top of LLMs.