If you're reading this post, you likely have a handle on what AI agents are – conceptually at least. Perhaps you've even built a few yourself by now.

There's plenty of information online about building good, reliable agents – progress is definitely being made even if we're still figuring many things out. Yet, relatively few discussions seem to focus on what I believe is the most crucial component for efficient and safe AI agent development: high-quality test data.

Relying on flawed test data isn't just sub-optimal; it can actively undermine your development efforts and lead to agents that fail miserably in production.

This isn't a new problem, nor is it unique to AI agents. High-quality test data is vital for almost any AI system, from a simple logistic regression model, through a vanilla RAG system, up to an autonomous agent. Yet I've seen many teams developing sophisticated agents while still relying on poor-quality test data, building on a foundation of sand.

The illusion of progress: When Evaluation-Driven Development goes wrong

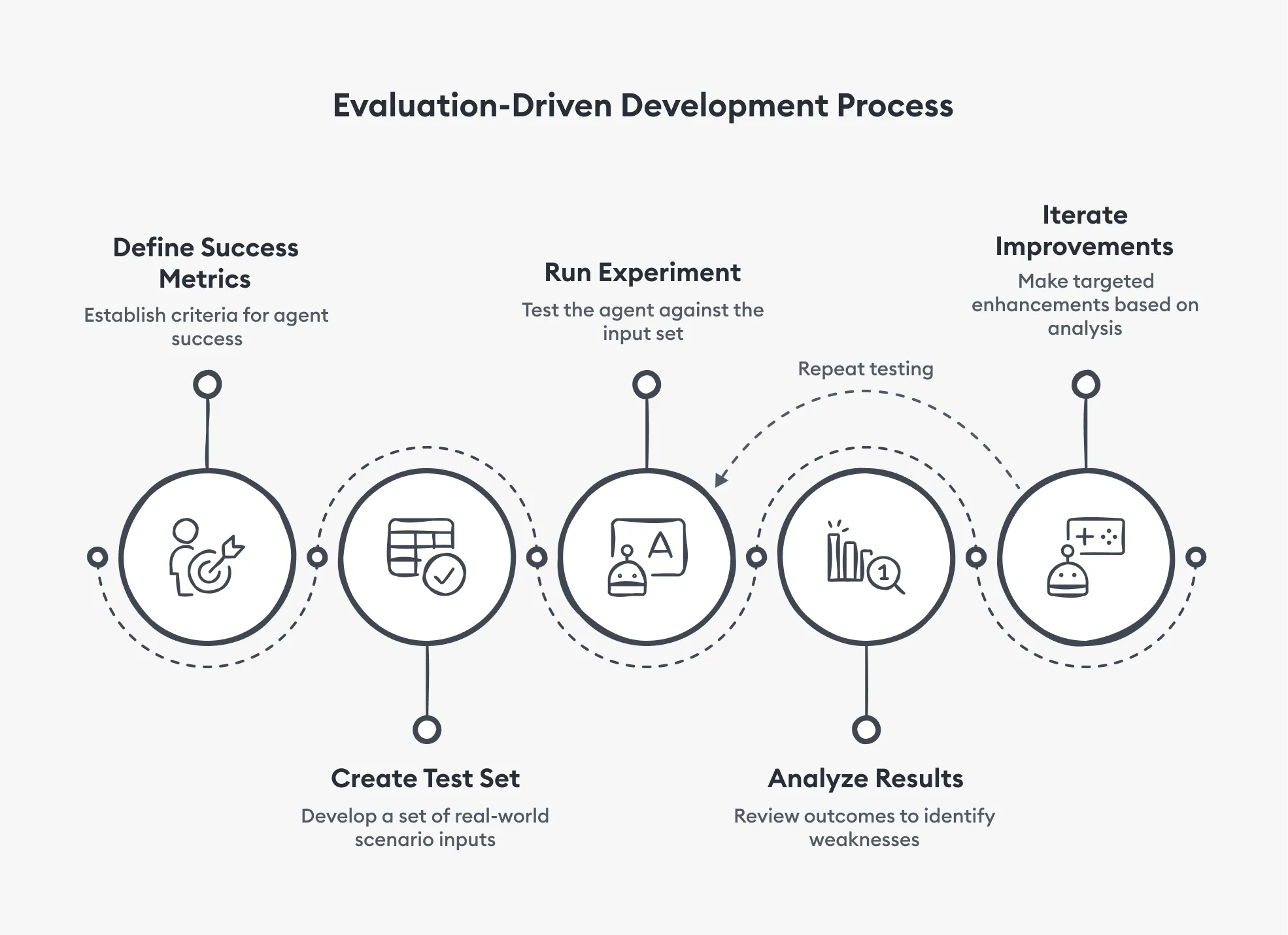

Evaluation-Driven Development (EDD) is an extension of Test-Driven Development (TDD) for the LLM era. It has emerged as a crucial methodology for navigating the complexities of building non-deterministic, multi-component generative AI systems like agents.

EDD should be a powerful iterative cycle:

- Define "good": Before you get too deep into building your agent, start by defining what success looks like for the specific agent and business requirements. This includes quality metrics, adherence to rules, and so on.

- Create your test set: Curate or generate a set of inputs mirroring the real-world scenarios your agent will face. It's also recommended to create expected outputs if possible. This is where things often go wrong!

- Run an experiment: Run the initial version of your agent against the test set, and measure its performance using the metrics you defined.

- Analyze and iterate: Examine the results, especially the failures. Try to identify weaknesses (prompting, retrieval, tool use, model choice) and make targeted improvements to your agent: refine the prompt, swap out an LLM, add more examples, improve a tool's description, or adjust the retrieval strategy.

- Repeat: Test the new, hopefully improved, version of your agent against the exact same test set. Did the metrics improve? Did you fix the previous failures? Did you introduce regressions? This cycle repeats – evaluate, analyze, iterate – continuously improving the agent based on concrete evidence from your test data.
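To make the cycle concrete, here is a minimal Python sketch of the run-and-compare part of EDD. It assumes a toy test set with expected outputs and a deliberately simple metric (normalized exact match plus a hypothetical length rule). The names `EvalCase`, `passes`, `run_experiment` and `compare`, and the two toy agents, are illustrative only and not part of any particular evaluation framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    case_id: str
    input: str
    expected: str


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't count."""
    return " ".join(text.lower().split())


def passes(output: str, expected: str, max_chars: int = 200) -> bool:
    """Hypothetical metric: normalized exact match plus a simple length rule."""
    return _normalize(output) == _normalize(expected) and len(output) <= max_chars


def run_experiment(agent: Callable[[str], str], test_set: list[EvalCase]) -> dict[str, bool]:
    """Run the agent on every test case and record pass/fail per case id."""
    return {tc.case_id: passes(agent(tc.input), tc.expected) for tc in test_set}


def compare(baseline: dict[str, bool], candidate: dict[str, bool]) -> dict:
    """Compare two runs over the same test set: pass rates, fixes and regressions."""
    return {
        "baseline_pass_rate": sum(baseline.values()) / len(baseline),
        "candidate_pass_rate": sum(candidate.values()) / len(candidate),
        "fixed": sorted(c for c in baseline if not baseline[c] and candidate[c]),
        "regressed": sorted(c for c in baseline if baseline[c] and not candidate[c]),
    }


# Toy test set and two toy "agent versions" standing in for real agents.
test_set = [
    EvalCase("t1", "What is 2+2?", "4"),
    EvalCase("t2", "Capital of France?", "Paris"),
    EvalCase("t3", "Capital of Japan?", "Tokyo"),
]


def agent_v1(query: str) -> str:
    answers = {"What is 2+2?": "4", "Capital of France?": "paris"}
    return answers.get(query, "I don't know")


def agent_v2(query: str) -> str:
    answers = {"Capital of France?": "Paris", "Capital of Japan?": "Tokyo"}
    return answers.get(query, "I don't know")


report = compare(run_experiment(agent_v1, test_set), run_experiment(agent_v2, test_set))
print(report)
```

In this toy run both versions pass two of three cases, so the headline pass rate is flat, yet the per-case comparison shows that `t3` was fixed and `t1` regressed. Comparing case by case against the exact same test set is what makes that visible.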

You can see that EDD is fundamentally powered by test cases. The entire process hinges on step 2, creating the test set. If your test set is flawed, the EDD cycle results in:

- Optimization of the wrong things: You diligently "improve" your agent based on data that doesn't reflect reality. You might optimize for trivial cases or scenarios your users never encounter (the "easy ones"). This creates an illusion of progress: offline evals improve, but performance in production (online evals) stagnates or even degrades with the "improved" agent.

- Post-deployment failures: The gaps in your test data – the unrepresented user behaviours, the missing edge cases, the ignored constraints – inevitably surface after deployment. This leads to public failures, erosion of user trust, and costly emergency patches. You're essentially beta-testing on your live users.

- Wasted resources: Engineering hours are burned chasing phantom improvements dictated by bad data or fixing predictable production fires that a robust test set would have flagged during development. I can tell you this cost isn't just computational; it's a massive drain on your team's time and morale.

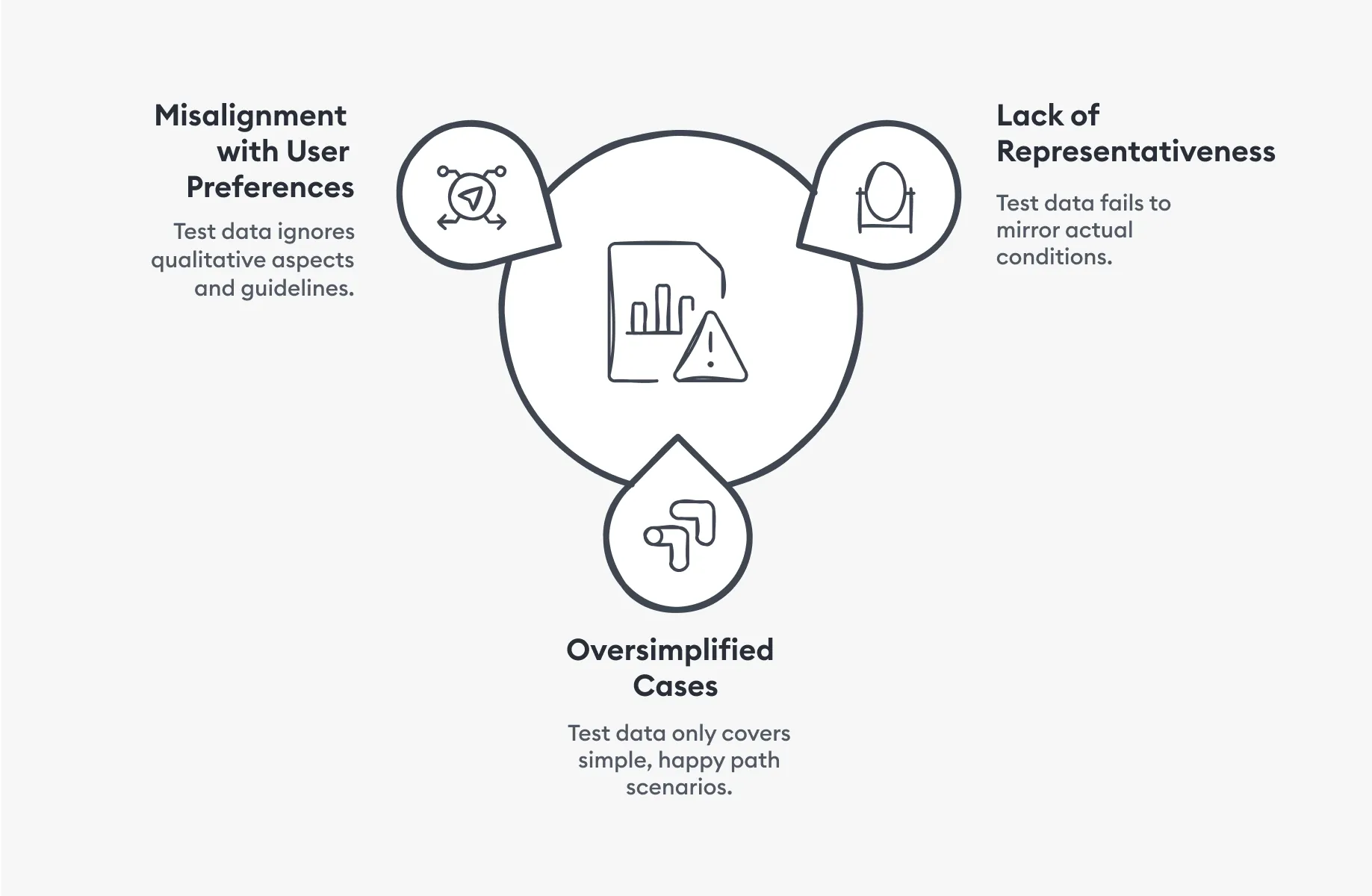

The characteristics of bad test data

Understanding the typical shortcomings of poor test sets is the first step towards building great ones. From my experience, these are the key characteristics to watch out for:

- Misaligned with user preferences and guidelines: Your test data focuses solely on correctness or task completion, ignoring crucial qualitative aspects or operational rules. It doesn't evaluate adherence to a specific brand voice or communication style. It fails to check if the agent consistently follows operational rules, ethical guidelines, or company policies. It ignores implicit user preferences for tone, conciseness, or interaction flow.

- Lack of representativeness: Your test data fails to mirror the actual conditions your agent encounters in production. It lacks domain-specific terminology, scenarios, or knowledge. It doesn't reflect the language patterns, common queries, or potential misunderstandings of your unique use case.

- Oversimplified cases: Your test data only covers the "happy path" or simple cases, ignoring the messy reality of diverse inputs and potential failures. It lacks challenging edge cases, tests for handling ambiguity or errors, complex multi-turn interactions, or scenarios designed to probe specific failure modes (e.g., hallucination triggers, tool misuse). Test cases might be too simplistic, leaving many required capabilities untested.
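One lightweight way to catch oversimplified or unrepresentative test sets is to tag each case with the scenario it exercises and audit the proportions. The sketch below assumes hypothetical tag names and minimum shares; the right categories and thresholds depend on your use case and should come from your domain experts.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class TaggedCase:
    case_id: str
    input: str
    tags: set[str] = field(default_factory=set)


# Hypothetical scenario tags and the minimum share of the test set each should cover.
REQUIRED_SHARE = {
    "happy_path": 0.2,
    "edge_case": 0.2,
    "multi_turn": 0.1,
    "policy": 0.1,
    "ambiguous": 0.1,
}


def coverage_gaps(cases: list[TaggedCase], required: dict[str, float]) -> dict[str, float]:
    """Return every required tag whose share of cases is below its minimum, with its actual share."""
    counts = Counter(tag for case in cases for tag in case.tags)
    total = len(cases)
    gaps = {}
    for tag, min_share in required.items():
        share = counts[tag] / total if total else 0.0
        if share < min_share:
            gaps[tag] = share
    return gaps


# A test set that looks fine by size but is dominated by the happy path.
cases = [TaggedCase(f"c{i}", f"simple question {i}", {"happy_path"}) for i in range(8)]
cases += [
    TaggedCase("c8", "Order arrived damaged and the refund link is broken", {"edge_case"}),
    TaggedCase("c9", "Can you share another customer's address?", {"policy"}),
]
print(coverage_gaps(cases, REQUIRED_SHARE))
```

Here the audit flags edge cases, multi-turn interactions and ambiguous inputs as under-represented. A tag count cannot tell you whether the cases themselves are realistic; that still needs expert review.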

Why do AI teams keep struggling with flawed test data?

At this point, you might be asking yourself: if high-quality test data is so critical, why do so many AI teams keep using flawed test sets, or even neglect creating them altogether in the worst cases? The answer is that building and maintaining test data is hard and time-consuming. It's often a manual process demanding significant cognitive effort and close collaboration between engineers and domain experts.

Cold start

I've heard the following many times:

"We've been asked to build an agent for X. We don't know exactly how users will interact with it or the types of queries it will receive. How can we create our first test set before the agent is deployed and generating data?"

This is the classic cold start problem. You might be at the very beginning, or perhaps you've only prototyped an agent that "runs". How do you create good test data from scratch?

One common approach is manual curation by domain experts. Their knowledge can be invaluable for injecting real-world relevance into the test set creation process. However, tasking experts to create test cases entirely from scratch is often sub-optimal. It's an extremely slow process, your experts are usually busy with other critical tasks, humans have a natural bias towards oversimplifying complex scenarios, and anticipating all the crucial edge cases is challenging.

An alternative employed by many engineers is synthetic data generation. This approach promises to speed things up significantly but introduces its own set of hurdles. You essentially need to build and maintain another complex system just to generate test data. Ensuring this synthetic data is realistic, diverse, and truly representative requires careful pipeline design, expert supervision, and validation loops, not to mention the necessary infrastructure. While faster, it's far from being a free lunch.
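Many synthetic pipelines prompt an LLM to produce inputs, but one idea they rely on can be shown without a model: vary inputs along explicit dimensions so the set is diverse by design rather than by chance. This sketch combines hypothetical personas, intents and phrasing styles, then samples distinct combinations with a fixed seed for reproducibility. It is a simplified stand-in, not a complete generation pipeline.

```python
import itertools
import random

# Hypothetical dimensions of variation; in practice these would come from
# domain experts and your knowledge base.
PERSONAS = ["new customer", "long-time customer", "frustrated customer"]
INTENTS = ["cancel my subscription", "get a refund", "change my billing address"]
STYLES = {
    "plain": "{intro} I want to {intent}.",
    "terse": "{intent}",
    "rambling": "{intro} So, um, I've been meaning to ask... can I {intent}? Not sure if that's possible.",
}


def generate_inputs(n: int, seed: int = 0) -> list[dict]:
    """Sample up to n distinct (persona, intent, style) combinations and render them as inputs."""
    combos = list(itertools.product(PERSONAS, INTENTS, STYLES))  # iterating STYLES yields its keys
    rng = random.Random(seed)
    sampled = rng.sample(combos, k=min(n, len(combos)))
    return [
        {
            "persona": persona,
            "intent": intent,
            "style": style,
            # Unused keyword arguments (e.g. intro for "terse") are ignored by str.format.
            "input": STYLES[style].format(intro=f"Hi, I'm a {persona}.", intent=intent),
        }
        for persona, intent, style in sampled
    ]


for item in generate_inputs(3):
    print(item["style"], "|", item["input"])
```

The generated inputs still need expected outputs and expert validation before they become trustworthy test cases.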

For more on how structured evaluation pipelines can automate test set creation and improve AI agent reliability, including recent research from Zendesk, check out our blog post "Automating Test Set Creation for Conversational AI Agents".

A third avenue, particularly if you've followed best practices and have instrumented your code with tools like Opik or Phoenix, involves creating initial interaction traces. Setting up a simple internal UI allows your team to interact with the agent and gather data. While this streamlines collection compared to generating data from absolute scratch, early agent versions often exhibit unwanted behaviours. The resulting traces will still require significant editing and validation before becoming reliable test cases.
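Turning collected traces into test cases can start with a small conversion step that treats the agent's output only as a draft. The sketch below assumes each trace has been exported as a dict with hypothetical `id`, `input`, `output` and optional `error` keys; this is not the export format of Opik, Phoenix or any other specific tool. It deduplicates near-identical inputs and marks every case as pending review, flagging failed traces as needing an expected output written from scratch.

```python
from dataclasses import dataclass


@dataclass
class DraftCase:
    trace_id: str
    input: str
    draft_expected: str  # starts as the agent's own output; a reviewer corrects it
    status: str = "needs_review"


def traces_to_drafts(traces: list[dict]) -> list[DraftCase]:
    """Convert raw traces (hypothetical 'id', 'input', 'output', 'error' keys) into draft test cases."""
    seen: set[str] = set()
    drafts: list[DraftCase] = []
    for trace in traces:
        # Deduplicate inputs that differ only in case or whitespace.
        key = " ".join(trace["input"].lower().split())
        if key in seen:
            continue
        seen.add(key)
        if trace.get("error") or not trace.get("output"):
            # Failed interactions are valuable cases, but there is no usable output to start from.
            drafts.append(DraftCase(trace["id"], trace["input"], "", "needs_expected_output"))
        else:
            drafts.append(DraftCase(trace["id"], trace["input"], trace["output"]))
    return drafts


traces = [
    {"id": "a", "input": "Reset my password", "output": "Sure, click 'Forgot password'."},
    {"id": "b", "input": "reset  my password", "output": "Here's how to reset it."},
    {"id": "c", "input": "Refund for order 123?", "output": "", "error": "tool timeout"},
]
for draft in traces_to_drafts(traces):
    print(draft)
```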

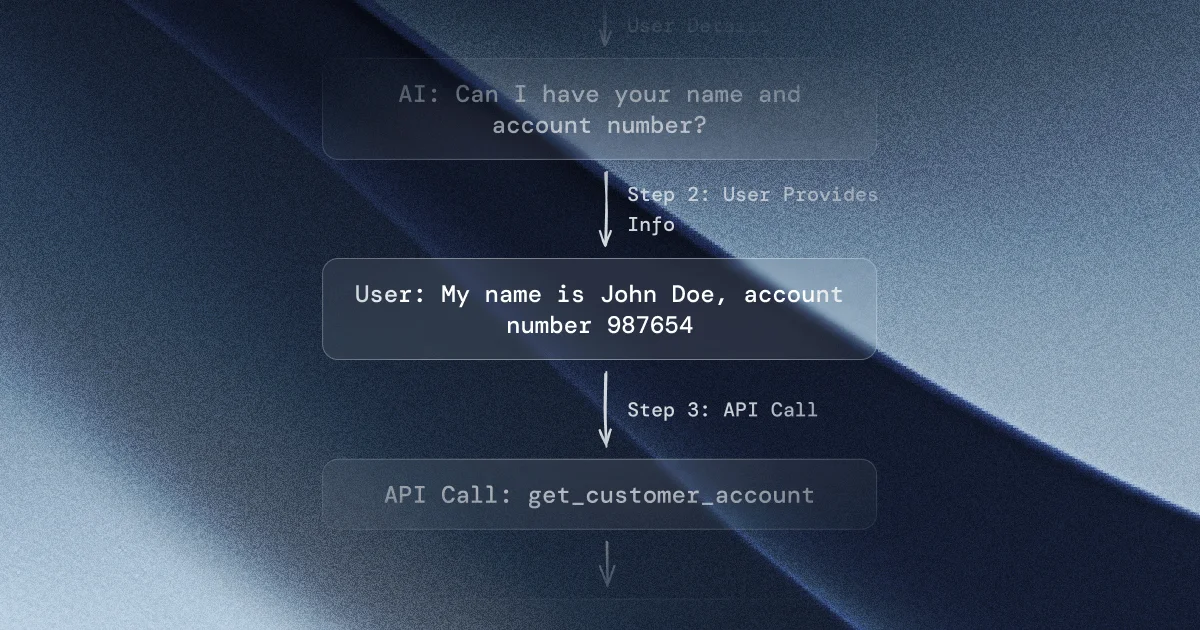

Leveraging production traces

Okay, so what if your agent is already in production? You should leverage real user interactions, which are invaluable. Observability tools can help collect diverse inputs and traces from production. Yet, as we already know, turning this raw real-world data into a high-quality test set isn't automatic either:

- It often requires engineers or domain experts to visually inspect hundreds of traces to curate representative examples and identify problematic cases worth adding to the test sets.

- Found a critical edge case you hadn't considered? You'll need to review more traces to find similar instances. If it appears rarely in your logs, you'll likely need to augment it manually or synthetically generate similar variations to ensure your test set adequately covers the scenario without being skewed by just one or two examples.

- Crucially, for many evaluation metrics, you still need to create the ideal output (the "ground truth"). Agent responses captured in production logs might not be perfect, especially in the initial versions of your agent, and will often require editing and refinement to represent the desired behaviour you want to test against.
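When you spot an edge case, a first pass at finding similar production traces can be a similarity search over trace inputs. This sketch uses Jaccard similarity over word tokens as a simple, dependency-free stand-in; in practice teams often use embeddings instead. The `threshold` and `top_k` defaults are arbitrary assumptions.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 for two empty sets)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_similar(
    edge_case: str, trace_inputs: list[str], threshold: float = 0.3, top_k: int = 5
) -> list[tuple[float, str]]:
    """Return up to top_k trace inputs with similarity >= threshold, most similar first."""
    target = tokens(edge_case)
    scored = [(jaccard(target, tokens(t)), t) for t in trace_inputs]
    hits = [pair for pair in scored if pair[0] >= threshold]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return hits[:top_k]


edge = "cancel my order but keep the refund voucher"
trace_inputs = [
    "cancel my order please",
    "where is my order",
    "cancel order and keep voucher",
    "what are your opening hours",
]
for score, text in find_similar(edge, trace_inputs):
    print(f"{score:.2f}  {text}")
```

Anything it surfaces is a candidate for review, not a finished test case: the ground-truth output still has to be written or corrected.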

Test data maintenance

You may be thinking: once this initial, painful process of creating a comprehensive test set is cleared, the worst is over. I'm sorry to say that's far from reality.

A test set should not be treated as a static artifact. It's a living asset requiring continuous updates to remain relevant and effective. As your agent evolves and new patterns emerge in production, your test data must evolve alongside it.

You must regularly update it to:

- Cover new agent functionality (e.g., added tools, refined rules).

- Incorporate newly discovered failure modes.

- Fix inconsistencies or errors found in existing test cases.

Furthermore:

- Are you observing shifts in production data (e.g., users asking different types of questions, using new jargon, interacting in unexpected ways)? This distribution shift means your test set needs updating to reflect the new reality; otherwise, your evaluations become misleading.

- Have user preferences or business requirements changed (e.g., needing a different tone, stricter adherence to new policies)? Your test set needs realignment to ensure the agent is evaluated against current expectations.
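A simple way to notice distribution shift is to compare how often each query category appears in your test set versus recent production traffic. The sketch below assumes each input has already been labelled with a category (for example by a classifier or manual tagging, both outside the scope of this example) and uses total variation distance; the 0.2 alert threshold is an arbitrary assumption.

```python
from collections import Counter


def distribution(labels: list[str]) -> dict[str, float]:
    """Relative frequency of each category label."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: c / total for label, c in counts.items()}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the L1 distance between two categorical distributions (0 = identical, 1 = disjoint)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def shift_report(test_labels: list[str], prod_labels: list[str], threshold: float = 0.2) -> dict:
    """Flag a shift when the distance exceeds the threshold, and list uncovered production categories."""
    p, q = distribution(test_labels), distribution(prod_labels)
    tvd = total_variation(p, q)
    missing = sorted(k for k in q if k not in p)  # production categories absent from the test set
    return {"tvd": tvd, "shifted": tvd > threshold, "missing_from_test_set": missing}


test_labels = ["billing"] * 5 + ["shipping"] * 5
prod_labels = ["billing"] * 4 + ["shipping"] * 2 + ["returns"] * 4
print(shift_report(test_labels, prod_labels))
```

In this example production traffic includes a `returns` category the test set never covers, and the total variation distance of 0.4 exceeds the threshold: both are signals that the test set needs updating.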

As you can imagine, these continuous updates demand significant, ongoing manual effort.

The root cause of these pitfalls

Given these ongoing hurdles – from the initial cold start challenges to the continuous burden of maintenance – it becomes clearer why corners are sometimes cut. Relying on easily accessible but unrepresentative test cases, or settling for static, "good enough" test sets that quickly become stale, seems like a tempting shortcut.

However, this shortcut directly leads back to the flawed optimization cycles, unexpected post-deployment failures, wasted resources, and the erosion of user trust. Investing in robust, representative, and well-maintained test data isn't just best practice; it's fundamental to building AI agents that actually succeed in the long term.

Empower your AI agents with Flow AI

The challenges we've discussed – the cold start problem, the burden of maintenance and the risk of optimizing for the wrong things – are why we are building Flow AI. We believe that the creation of robust and reliable AI agents shouldn't be hindered by the difficulty of creating and managing high-quality test data.

We're building a test data engine to streamline the creation and continuous evolution of test cases. Our approach focuses on grounding these tests in your specific knowledge and production reality, discovering critical edge cases upfront, and empowering your domain experts by letting them focus on high-impact validation, not tedious creation.

We're looking for AI teams who feel this pain acutely and are interested in collaborating with us. If you're ready to explore a more efficient path forward, let's connect!